In the last post, we generated our first Random Forest model with mostly default parameters so that we could get an idea of how important the features are. From that, we can further reduce the dimensionality of our data set by throwing out some arbitrary number of the weakest features. We could continue experimenting with the threshold for removing “weak” features, or even go back and experiment with the correlation and PCA thresholds to change how many features we end up with… but we’ll move forward with what we’ve got.

Now that we’ve got our final data set, we can begin the process of hyperparameter optimization: finding the best set of parameters to use for our model. This is easier for Random Forest than for most models, because there are fewer parameters and good classification performance depends less on getting them exactly right. However, the process we use here applies to all models in scikit-learn (and, more generally, to models in other frameworks).

Scikit-learn has two methods for performing hyperparameter optimization, and they are almost identical to implement: GridSearchCV and RandomizedSearchCV. In both cases, you specify a list of candidate values for each parameter you’d like to test. You feed these lists to the search and it fits the model with combinations of the specified values, scoring each combination with cross-validation. GridSearchCV tests every single possible combination of parameters. RandomizedSearchCV lets you specify how many different combinations you’d like to test and then randomly selects that many to try. As you can imagine, if you are working with a model with a LOT of critical parameters, and/or parameters whose best values could fall anywhere in a wide range, RandomizedSearchCV can be super useful for saving time. The code for this post demonstrates both approaches, along with a helper that reports the results of each search.
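To make the difference concrete, here is a minimal pure-Python sketch of the two strategies. It is not how scikit-learn implements them; the `param_grid` values, the `n_iter` budget, and the fixed seed are all assumptions chosen for illustration. The grid strategy enumerates every combination, while the randomized strategy draws a fixed number of distinct combinations.

```python
import itertools
import random

# Hypothetical parameter lists, chosen only for illustration
param_grid = {
    "n_estimators": [1000, 2500, 5000],
    "criterion": ["gini", "entropy"],
    "max_depth": [5, 10, 25],
}

names = sorted(param_grid)

# Grid strategy: every possible combination (3 * 2 * 3 = 18 here)
all_combinations = [
    dict(zip(names, values))
    for values in itertools.product(*(param_grid[name] for name in names))
]

# Randomized strategy: a fixed budget of distinct combinations, picked at random
n_iter = 5
rng = random.Random(0)  # fixed seed so the sketch is repeatable
sampled_combinations = rng.sample(all_combinations, n_iter)

print("grid search would fit", len(all_combinations), "candidates")
print("randomized search would fit", len(sampled_combinations), "candidates")
```

Keep in mind that each candidate is refit once per cross-validation fold, so with 10 folds the 18-candidate grid above would cost 180 model fits, versus 50 for the 5-candidate random sample.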

Here’s a look at the code:

    import numpy as np
    from sklearn.ensemble import RandomForestClassifier
    from sklearn.model_selection import GridSearchCV, RandomizedSearchCV

    # X (feature matrix) and y (labels) are the final data set built in the previous posts

    # Utility function to report the top parameter sets and return the best one
    # (adapted from http://scikit-learn.org/stable/auto_examples/randomized_search.html)
    # Takes the cv_results_ dict of a fitted search; newer scikit-learn releases
    # provide this in place of the old grid_scores_ attribute
    def report(cv_results, n_top=5):
        # Candidate indices ordered from best (rank 1) to worst
        order = np.argsort(cv_results["rank_test_score"])[:n_top]
        for i, idx in enumerate(order):
            print("Parameters with rank: {0}".format(i + 1))
            print("Mean validation score: {0:.4f} (std: {1:.4f})".format(
                cv_results["mean_test_score"][idx],
                cv_results["std_test_score"][idx]))
            print("Parameters: {0}".format(cv_results["params"][idx]))
            print("")
        return cv_results["params"][order[0]]

    # The most common value for the max number of features to look at in each split is sqrt(# of features)
    # (cast to int, because a float max_features is interpreted as a fraction of the features)
    sqrtfeat = int(np.sqrt(X.shape[1]))

    # Simple grid test (162 combinations)
    grid_test1 = {"n_estimators": [1000, 2500, 5000],
                  "criterion": ["gini", "entropy"],
                  "max_features": [sqrtfeat - 1, sqrtfeat, sqrtfeat + 1],
                  "max_depth": [5, 10, 25],
                  "min_samples_split": [2, 5, 10]}

    # Large randomized test using max_depth to control tree size (5000 possible combinations)
    random_test1 = {"n_estimators": np.rint(np.linspace(X.shape[0]*2, X.shape[0]*4, 5)).astype(int),
                    "criterion": ["gini", "entropy"],
                    "max_features": np.rint(np.linspace(sqrtfeat/2, sqrtfeat*2, 5)).astype(int),
                    "max_depth": np.rint(np.linspace(1, X.shape[1]/2, 10)).astype(int),
                    "min_samples_split": np.rint(np.linspace(2, X.shape[0]/50, 10)).astype(int)}

    # Large randomized test using min_samples_leaf and max_leaf_nodes to control tree size (50k combinations)
    random_test2 = {"n_estimators": np.rint(np.linspace(X.shape[0]*2, X.shape[0]*4, 5)).astype(int),
                    "criterion": ["gini", "entropy"],
                    "max_features": np.rint(np.linspace(sqrtfeat/2, sqrtfeat*2, 5)).astype(int),
                    "min_samples_split": np.rint(np.linspace(2, X.shape[0]/50, 10)).astype(int),
                    "min_samples_leaf": np.rint(np.linspace(1, X.shape[0]/200, 10)).astype(int),
                    "max_leaf_nodes": np.rint(np.linspace(10, X.shape[0]/50, 10)).astype(int)}

    forest = RandomForestClassifier(oob_score=True)

    print("Hyperparameter optimization using GridSearchCV...")
    grid_search = GridSearchCV(forest, grid_test1, n_jobs=-1, cv=10)
    grid_search.fit(X, y)
    best_params_from_grid_search = report(grid_search.cv_results_)

    print("Hyperparameter optimization using RandomizedSearchCV with max_depth parameter...")
    grid_search = RandomizedSearchCV(forest, random_test1, n_jobs=-1, cv=10, n_iter=100)
    grid_search.fit(X, y)
    best_params_from_rand_search1 = report(grid_search.cv_results_)

    print("...and using RandomizedSearchCV with min_samples_leaf + max_leaf_nodes parameters...")
    grid_search = RandomizedSearchCV(forest, random_test2, n_jobs=-1, cv=10, n_iter=500)
    grid_search.fit(X, y)
    best_params_from_rand_search2 = report(grid_search.cv_results_)

In this example, we run a few different searches. You’ll notice that the two randomized searches use different parameter sets; this is a result of how scikit-learn implements random forests. As I understand it, in the scikit-learn version used here the max_depth parameter is ignored if either the min_samples_leaf or max_leaf_nodes parameters are set, so we essentially perform two different tests: one that controls tree size with max_depth, and one that controls it with min_samples_leaf and max_leaf_nodes. If you just threw the max_depth parameter in with all the others, you could unknowingly never test the depth parameter and waste time on redundant parameter combinations. (This kind of interaction can change between releases, so check the documentation for the version you have installed.) This brings up an important point: you need to get familiar with all the parameters of whatever model type you’re optimizing to make sure you’re covering all the use cases and configurations that are possible. I wasn’t able to determine whether RandomizedSearchCV can be given multiple groupings of parameters to test together (to cover the case I’ve just described, with incompatible parameters). If anyone knows of a way to do that, please leave a comment!
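One possible answer, at least in newer scikit-learn releases (0.22 and later, as far as I can tell): `RandomizedSearchCV` accepts a list of dictionaries for `param_distributions`, and each sampled candidate takes all of its values from a single dictionary. The sketch below is a hedged illustration rather than part of the original workflow; the synthetic data from `make_classification`, the `param_groups` values, and the small `n_estimators` and `cv` settings are assumptions chosen to keep it fast.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import RandomizedSearchCV

# Small synthetic data set standing in for the Titanic features (an assumption)
X_demo, y_demo = make_classification(n_samples=200, n_features=10, random_state=0)

# Two separate groupings: one sized by depth, one sized by leaf constraints
param_groups = [
    {"max_depth": [2, 4, 8], "min_samples_split": [2, 5, 10]},
    {"min_samples_leaf": [1, 2, 4], "max_leaf_nodes": [10, 20, 40]},
]

search = RandomizedSearchCV(
    RandomForestClassifier(n_estimators=25, random_state=0),
    param_distributions=param_groups,
    n_iter=6,
    cv=3,
    random_state=0,
)
search.fit(X_demo, y_demo)

# Each sampled candidate only contains parameters from one grouping
for params in search.cv_results_["params"]:
    print(sorted(params))
```

`GridSearchCV` takes a list of dictionaries for `param_grid` in the same way, so the incompatible groupings can share one search object instead of needing separate runs.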

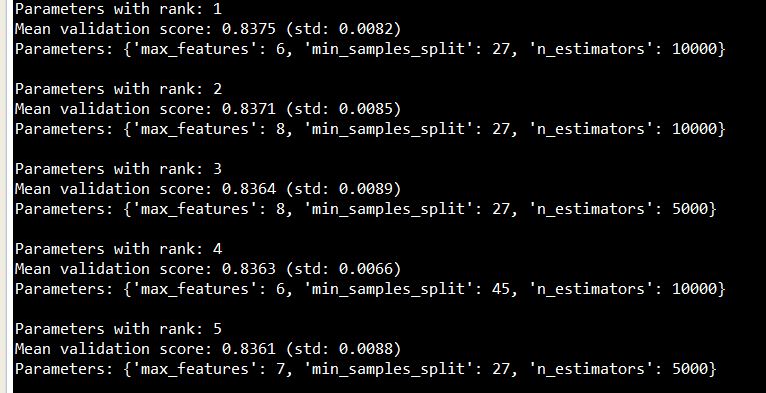

Here’s an example of what the output would look like (the parameter values don’t exactly match what I’ve got above, but same idea!)

So, now we’ve got everything in place to search for optimal parameters for the model. In the next couple of posts we’ll take a look at different ways to validate our model and make sure that we’re not clearly overfitting or underfitting. The cross-validation in the hyperparameter optimization and the OOB estimates inherent in Random Forests are a good start, but there are a few other powerful mechanisms we can use for validation. We’ll start with Learning Curves next time.
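As a quick illustration of the two built-in checks mentioned above, here is a minimal sketch that prints the cross-validation score and the OOB score side by side after a search. The synthetic data, the tiny `max_depth` grid, and the `n_estimators` and `cv` values are assumptions for the demo, not the settings used for the Titanic data.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV

# Small synthetic data set standing in for the real features (an assumption)
X_demo, y_demo = make_classification(n_samples=300, n_features=10, random_state=0)

search = GridSearchCV(
    RandomForestClassifier(n_estimators=100, oob_score=True, random_state=0),
    {"max_depth": [3, 6]},
    cv=5,
)
search.fit(X_demo, y_demo)

# Mean cross-validated accuracy of the best parameter set
print("CV score:  {0:.4f}".format(search.best_score_))
# Out-of-bag accuracy of the best model after it is refit on all of the data
print("OOB score: {0:.4f}".format(search.best_estimator_.oob_score_))
```

If the two numbers are far apart, that is a hint that something deserves a closer look before trusting either estimate.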

Kaggle Titanic Tutorial in Scikit-learn

Part I – Intro

Part II – Missing Values

Part III – Feature Engineering: Variable Transformations

Part IV – Feature Engineering: Derived Variables

Part V – Feature Engineering: Interaction Variables and Correlation

Part VI – Feature Engineering: Dimensionality Reduction w/ PCA

Part VII – Modeling: Feature Importance

Part VIII – Modeling: Hyperparameter Optimization

Part IX – Validation: Learning Curves

Part X – Validation: ROC Curves

Part XI – Summary